Single Headed Attention RNN

Overview

- The goal is to build a simple language model that can be trained on a single GPU and still perform well.

- Because of that constraint, the aim is to avoid Transformer architectures and see how far a traditional LSTM can go.

Architecture

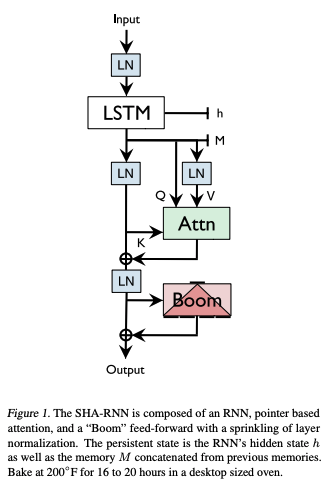

- The model consists of a trainable embedding layer, a single-headed attention LSTM layer, and a dense softmax layer.

- The weights of the trainable embedding layer and the dense softmax layer are shared (tied).
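To make the weight sharing concrete, here is a minimal NumPy sketch. It assumes the usual form of weight tying, where one matrix is used both to look up input embeddings and to project hidden states back onto the vocabulary. The names `E`, `embed`, `output_probs` and the sizes are illustrative, not taken from the paper, and the LSTM/attention stack is replaced by a stand-in.

```python
import numpy as np

rng = np.random.default_rng(0)
vocab_size, hidden = 50, 16  # illustrative sizes (assumption)

# One matrix serves as both the input embedding and the output projection.
E = rng.normal(scale=0.1, size=(vocab_size, hidden))

def embed(token_ids):
    """Look up input embeddings: (seq,) -> (seq, hidden)."""
    return E[token_ids]

def output_probs(h):
    """Project hidden states onto the vocabulary using the same matrix E."""
    logits = h @ E.T                                # (seq, vocab_size)
    logits -= logits.max(axis=-1, keepdims=True)    # numerical stability
    p = np.exp(logits)
    return p / p.sum(axis=-1, keepdims=True)        # softmax over the vocabulary

tokens = np.array([3, 7, 42])
h = embed(tokens)        # stand-in for the LSTM + attention output
probs = output_probs(h)  # one distribution over the vocabulary per position
```

Because both directions use `E`, the model stores one vocabulary-sized matrix instead of two.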

Single Headed Attention

- Similar to the Transformer's multi-head attention (MHA), but with just 1 head :P
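As a rough illustration, single-head attention can be sketched as plain scaled dot-product attention with no splitting into heads. This is a generic sketch, not the paper's exact layer: the function name, the shapes, and the absence of learned projections and masking are all simplifying assumptions.

```python
import numpy as np

def single_head_attention(q, k, v):
    """Scaled dot-product attention with a single head.

    q: (T_q, d) queries, k: (T_k, d) keys, v: (T_k, d_v) values.
    Returns the attended output (T_q, d_v) and the attention weights (T_q, T_k).
    """
    d = q.shape[-1]
    scores = q @ k.T / np.sqrt(d)                        # similarity of each query to each key
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)       # softmax over the keys
    return weights @ v, weights

rng = np.random.default_rng(0)
q = rng.normal(size=(4, 8))   # 4 query positions (illustrative sizes)
k = rng.normal(size=(6, 8))   # 6 memory positions
v = rng.normal(size=(6, 8))
out, weights = single_head_attention(q, k, v)
```

With multiple heads, `q`, `k` and `v` would be split along the feature dimension and this routine run once per head. Here it runs once over the full dimension.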

Boom Layer

- Two feed-forward layers with a GELU activation: project up to a larger dimension, then back down.
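A minimal sketch of the layer as described in these notes (project up, apply GELU, project back down) might look like the following. The 4x expansion factor, the parameter names, and the tanh approximation of GELU are assumptions for illustration, and the original implementation may differ in its details.

```python
import numpy as np

def gelu(x):
    """Tanh approximation of the GELU activation."""
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x**3)))

def boom(x, W_up, b_up, W_down, b_down):
    """Project up to a larger dimension, apply GELU, project back down."""
    return gelu(x @ W_up + b_up) @ W_down + b_down

rng = np.random.default_rng(0)
hidden, big = 16, 64  # illustrative sizes: a 4x expansion (assumption)
W_up = rng.normal(scale=0.1, size=(hidden, big))
b_up = np.zeros(big)
W_down = rng.normal(scale=0.1, size=(big, hidden))
b_down = np.zeros(hidden)

x = rng.normal(size=(5, hidden))         # 5 positions of hidden states
y = boom(x, W_up, b_up, W_down, b_down)  # same shape as x
```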

Results

| Model | Bits Per Char |
|---|---|
| LSTM | 1.182 |
| SHA-RNN | 1.100 |
| Adaptive Transformer | 1.04 |

Kaushik Rangadurai
