Pangloss: Fast Entity Linking in Noisy Text Environments

Overview

- Entity linking is the task of mapping potentially ambiguous terms in text to the corresponding entities in a knowledge base (KB) such as Wikipedia.

- Pangloss is an entity disambiguation system for noisy text.

- It combines a probabilistic, linear-time key-phrase identification algorithm with a semantic similarity engine based on context-dependent document embeddings.

- Entity Linking identifies a phrase (or surface form) in a text and links it to an entity in a KB.

- Challenges include synonymy (there are multiple ways to say "a cup of coffee") and polysemy ("Tesla" can refer to both the man and the machine).

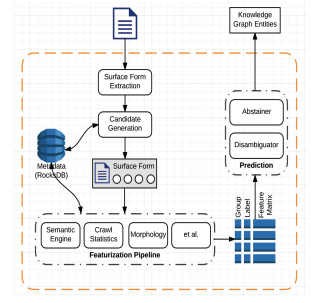

Architecture

Datasets

- Wikipedia, Wikipedia PageViews, LOD Wikilinks, Common Crawl and Workplace Chat.

Surface Form Extraction

- NER doesn’t work well on workplace chat because of its poor grammar and syntax.

- Apply SegPhrase instead; the probability that a phrase appears as a link in Wikipedia can also be used as a signal.
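The link-probability idea can be sketched in a few lines. This is not SegPhrase and not the Pangloss implementation; it is a minimal illustration that assumes two precomputed count tables (`anchor_counts` and `mention_counts`, both hypothetical names with made-up toy values) and a greedy longest-match scan with an arbitrary threshold.

```python
def link_probability(anchor_counts, mention_counts, phrase):
    """Fraction of a phrase's occurrences that appear as link anchor text."""
    total = mention_counts.get(phrase, 0)
    if total == 0:
        return 0.0
    return anchor_counts.get(phrase, 0) / total


def extract_surface_forms(tokens, anchor_counts, mention_counts,
                          threshold=0.1, max_len=3):
    """Greedy left-to-right scan that prefers the longest qualifying phrase."""
    i, found = 0, []
    while i < len(tokens):
        # Try the longest n-gram first, then shrink.
        for n in range(min(max_len, len(tokens) - i), 0, -1):
            phrase = " ".join(tokens[i:i + n]).lower()
            if link_probability(anchor_counts, mention_counts, phrase) >= threshold:
                found.append(phrase)
                i += n
                break
        else:
            # No n-gram starting here qualifies; move on one token.
            i += 1
    return found


# Toy counts, invented for illustration only.
anchor_counts = {"power steering": 40, "tesla": 300}
mention_counts = {"power steering": 50, "tesla": 400, "the": 100000, "power": 900}

tokens = "the Tesla has power steering".split()
forms = extract_surface_forms(tokens, anchor_counts, mention_counts)
print(forms)
```

Because the scan never relies on capitalization or part-of-speech tags, it is less sensitive to the grammar problems that hurt NER on chat text.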

Candidate Generation

- Given a (surface form, text) pair, one approach is multi-class classification over all entities. However, this doesn’t work well in practice because it needs a lot of training data.

- Instead, use anchor text from links to reduce the number of candidates considered.
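One way to picture anchor-text candidate generation is as a dictionary from anchor strings to the entities they link to. The sketch below assumes the links have already been extracted as `(anchor, entity)` pairs; the function names and the toy data are hypothetical and not taken from Pangloss.

```python
from collections import Counter, defaultdict


def build_anchor_index(links):
    """Map each lowercased anchor text to a Counter of linked entities."""
    index = defaultdict(Counter)
    for anchor, entity in links:
        index[anchor.lower()][entity] += 1
    return index


def candidates(index, surface_form, top_k=5):
    """Return up to top_k (entity, prior) pairs for a surface form."""
    counts = index.get(surface_form.lower())
    if not counts:
        return []
    total = sum(counts.values())
    return [(entity, n / total) for entity, n in counts.most_common(top_k)]


# Toy (anchor text, target entity) pairs, invented for illustration.
links = [
    ("Tesla", "wiki/Nikola_Tesla"),
    ("Tesla", "wiki/Tesla,_Inc."),
    ("Tesla", "wiki/Tesla,_Inc."),
    ("Nikola Tesla", "wiki/Nikola_Tesla"),
]
index = build_anchor_index(links)
cands = candidates(index, "tesla")
print(cands)
```

The downstream ranker then only has to choose among a handful of candidates per surface form rather than every entity in the KB.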

Joint Embedding

- Create embeddings for surface form text and KB entities in the same space.

- To compute embeddings for surface forms, join multi-word phrases into single tokens (e.g., power steering becomes power_steering).

- For entity embeddings, insert an entity token (e.g., uri:wiki/Wintermute) after each anchor text that links to that article.
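The two preprocessing steps above can be sketched as a small corpus-preparation function. The `[[Target|anchor text]]` markup is a simplified, assumed link format, and `prepare_sentence` and its helpers are hypothetical names; the resulting token lists would then be fed to a word2vec-style trainer, which is not shown here.

```python
import re

# Simplified wiki-style link: [[Target|anchor text]] (an assumed format).
LINK = re.compile(r"\[\[([^\]|]+)\|([^\]]+)\]\]")


def insert_entity_tokens(text):
    """Keep the anchor text and append a uri:wiki/... token right after it."""
    def repl(match):
        target, anchor = match.group(1), match.group(2)
        return f"{anchor} uri:wiki/{target.replace(' ', '_')}"
    return LINK.sub(repl, text)


def join_phrases(text, phrases):
    """Replace known multi-word phrases with underscore-joined tokens."""
    # Longest phrases first so shorter ones do not split them.
    for phrase in sorted(phrases, key=len, reverse=True):
        pattern = r"\b" + re.escape(phrase) + r"\b"
        text = re.sub(pattern, phrase.replace(" ", "_"), text)
    return text


def prepare_sentence(text, phrases):
    """Produce the token list for one training sentence."""
    return join_phrases(insert_entity_tokens(text), phrases).split()


sentence = "[[Wintermute|Wintermute]] controls the power steering"
tokens = prepare_sentence(sentence, ["power steering"])
print(tokens)
```

Since the entity token sits in the same context window as the words around its anchor, training places entities and surface forms in one shared vector space.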

Disambiguation Features

- Semantic Similarity - TF-IDF-weighted embeddings; HDBSCAN is used to cluster similar entity forms.

- Anchor Text - Read More on this.

- Popularity - the number of inbound links, the total number of page views each entry receives, the number of pageviews via redirect pages, and the number of outbound links (total and unique).

- Morphology - Levenshtein distance.
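Two of the features listed above are easy to illustrate: the Levenshtein distance between a surface form and an entity title, and the cosine similarity between a weighted context embedding and an entity embedding. The sketch below uses tiny hand-written vectors and IDF weights that are purely invented; HDBSCAN clustering and the popularity features are left out.

```python
import math


def levenshtein(a, b):
    """Edit distance via the standard two-row dynamic program."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        curr = [i]
        for j, cb in enumerate(b, 1):
            curr.append(min(prev[j] + 1,                # deletion
                            curr[j - 1] + 1,            # insertion
                            prev[j - 1] + (ca != cb)))  # substitution
        prev = curr
    return prev[-1]


def weighted_embedding(tokens, vectors, idf):
    """IDF-weighted mean of token vectors; repeated tokens supply the TF part."""
    dim = len(next(iter(vectors.values())))
    total, weight_sum = [0.0] * dim, 0.0
    for tok in tokens:
        if tok in vectors:
            w = idf.get(tok, 1.0)
            total = [t + w * v for t, v in zip(total, vectors[tok])]
            weight_sum += w
    return [t / weight_sum for t in total] if weight_sum else total


def cosine(u, v):
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


# Toy 2-d vectors and IDF weights, invented for illustration.
vectors = {
    "car": [1.0, 0.0], "inventor": [0.0, 1.0], "the": [0.5, 0.5],
    "uri:wiki/Tesla,_Inc.": [0.9, 0.1], "uri:wiki/Nikola_Tesla": [0.1, 0.9],
}
idf = {"car": 2.0, "inventor": 2.0, "the": 0.1}

context = weighted_embedding(["the", "car"], vectors, idf)
sim_company = cosine(context, vectors["uri:wiki/Tesla,_Inc."])
sim_person = cosine(context, vectors["uri:wiki/Nikola_Tesla"])
print(sim_company > sim_person, levenshtein("kitten", "sitting"))
```

The IDF weights keep frequent words such as "the" from dominating the context vector, so the informative word "car" pulls the passage toward the company entity.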

LTR & Abstaining

- (Surface Form, Passage, Entity) -> True/False

- Performance is improved by employing a pairwise preference algorithm that learns a model to determine which of two training examples should be ranked higher. Pairwise XGBoost with cross-validated hyperparameters for gamma, learning rate, number of estimators, max depth, and minimum child weight yields the best relevance versus runtime performance.

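- Abstain Model - given a (surface form, text) pair, can this be meaningfully linked?

- The abstain model can only improve precision: declining to link removes predictions but never adds correct ones, so it cannot raise recall.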
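The pairwise formulation and the abstain step can be sketched without any particular learner. The code below only shows how labeled candidates for one mention might be turned into preference pairs, and how a separate linkability score could gate the final answer; the function names, scores, and the 0.5 threshold are hypothetical. In practice a library objective such as XGBoost's `rank:pairwise` would handle the pairing internally.

```python
def pairwise_examples(candidates):
    """Build preference pairs from one mention's (features, is_correct) list.

    Each (correct, incorrect) pair yields a feature difference labeled 1
    and its negation labeled 0.
    """
    pairs = []
    for feats_i, correct_i in candidates:
        for feats_j, correct_j in candidates:
            if correct_i and not correct_j:
                diff = [a - b for a, b in zip(feats_i, feats_j)]
                pairs.append((diff, 1))
                pairs.append(([-d for d in diff], 0))
    return pairs


def link_or_abstain(scored, linkable_prob, threshold=0.5):
    """Return the top-scoring entity, or None when the abstain model says no."""
    if not scored or linkable_prob < threshold:
        return None
    return max(scored, key=lambda pair: pair[1])[0]


# Toy candidates for one mention: (feature vector, is the correct entity?).
mention_candidates = [([0.9, 0.2], True), ([0.4, 0.7], False), ([0.1, 0.1], False)]
pairs = pairwise_examples(mention_candidates)

scored = [("wiki/Tesla,_Inc.", 0.8), ("wiki/Nikola_Tesla", 0.3)]
linked = link_or_abstain(scored, linkable_prob=0.9)
skipped = link_or_abstain(scored, linkable_prob=0.2)
print(len(pairs), linked, skipped)
```

Keeping the abstain decision separate from the ranker means the threshold can be tuned on its own to trade fewer links for higher precision.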

Kaushik Rangadurai
